Running Hadoop on Cygwin in Windows (Single-Node Cluster)

In this document you will see how to set up a pseudo-distributed, single-node Hadoop cluster (any stable 1.0.x version) backed by the Hadoop Distributed File System and running on Windows (I am using Windows Vista). You can get your Hadoop cluster running in 12 steps.

Prerequisites

You can download all the recommended software before getting started with the steps below: Cygwin (including OpenSSH), a Java JDK and a stable Hadoop 1.0.x release.

Single-node Hadoop cluster: step-by-step instructions

1. Installing Cygwin

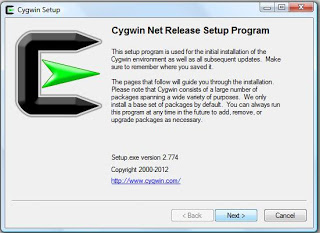

a) Cygwin comes with a normal setup.exe installer for Windows, but a few steps need your attention, so I will walk you through the installation step by step. Download the Cygwin setup.exe from the Cygwin website (cygwin.com).

b) Once you start the installer, the first screen that appears looks like this:

SSH Installation

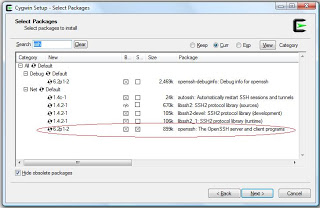

c) About four screens after the one above, you reach the package selection screen. Here you can select the OpenSSH package (listed under the Net category) so that it is installed along with Cygwin.

d) The Cygwin installer then includes all the dependent packages required for the installation.

Now you have installed Cygwin with OpenSSH.

2. Set Environment Variable in Windows

a) Find the “My Computer” icon on the desktop, right-click on it and select Properties from the menu.

b) When the Properties dialog box appears, click the Environment Variables button on the Advanced tab.

c) When the Environment Variables dialog shows up, select the Path variable in the System Variables box and click the Edit button.

d) In the Edit dialog, append your Cygwin bin path to the end of the Variable value field, separated from the previous entry by a semicolon.

(I installed Cygwin on the C: drive, so the entry I appended is c:\cygwin\bin.)

Now you are done with the Cygwin environment setup.

3. Set up the SSH daemon

a) Open the Cygwin command prompt.

b) Execute the following command:

$ ssh-host-config

c) When asked if privilege separation should be used, answer no.

d) When asked if sshd should be installed as a service, answer yes.

(If it prompts for the value of the CYGWIN environment variable, enter ntsec.)

4. Start SSH daemon

a) Find the My Computer icon on your desktop, right-click on it and select Manage from the context menu.

b) Open Services and Applications in the left-hand panel, then select the Services item.

c) Find the CYGWIN sshd item in the main section and right-click on it.

d) In the Properties popup, set “Startup type” to Automatic so that sshd starts when Windows starts. If the service is not running yet, click Start.

5. Setup authorization keys

a) Open a Cygwin terminal and execute the command

$ ssh-keygen

(We generate the key without a passphrase, so just press Enter at each prompt. Below is the sequence of text that appears in the terminal.)

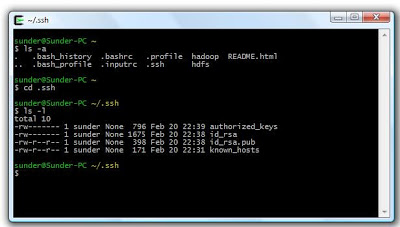

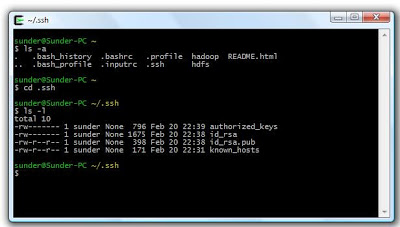

b) Once the command has finished generating the key, change to the .ssh directory:

$ cd ~/.ssh

(The .ssh folder is under your user's home directory. For example, my user profile on my system is “sunder”, so the folder is /home/sunder/.ssh.)

c) The RSA key pair has now been created with an empty password. Next, enable SSH access to your local machine with this newly created key:

$ cat id_rsa.pub >> authorized_keys

Now your public key is authorized for SSH logins to your local machine.
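The commands in b) and c) can be wrapped in a small shell function. This is a minimal sketch, not part of Cygwin or OpenSSH: the function name `authorize_key` is hypothetical, and it assumes the default key file names `id_rsa`/`id_rsa.pub`. Unlike a bare `cat ... >>`, it appends the key only once, and it tightens the permissions, because sshd's default `StrictModes yes` setting can refuse keys whose files other users can write to.

```shell
# Hypothetical helper: authorize the default RSA public key in an .ssh
# directory (defaults to ~/.ssh) for password-less "ssh localhost" logins.
authorize_key() {
  local dir="${1:-$HOME/.ssh}"
  local pub="$dir/id_rsa.pub"
  local auth="$dir/authorized_keys"

  # ssh-keygen must have produced the public key first.
  if [ ! -f "$pub" ]; then
    echo "missing $pub; run ssh-keygen first" >&2
    return 1
  fi

  touch "$auth" || return 1
  # Append the key only if an identical line is not already present,
  # so running this twice does not duplicate the entry.
  if ! grep -qxF -f "$pub" "$auth"; then
    cat "$pub" >> "$auth"
  fi

  # Keep the directory and key file private; sshd's StrictModes check
  # rejects key files that other users can write to.
  chmod 700 "$dir"
  chmod 600 "$auth"
}

# Usage (from a Cygwin terminal, after ssh-keygen):
#   authorize_key
#   ssh localhost
```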

To test the SSH installation, enter the following at a terminal prompt:

$ ssh localhost

(The first time you connect, ssh asks you to confirm the host key; answer yes. You should then see a message similar to this in the terminal:)

Last login: Mon Apr 8 21:36:45 2013 from sunder-pc

Now SSH is running successfully with the generated keys.

6. JAVA Installation

a) Installing Java on Windows is an easy step-by-step process.

b) Choose your Java installation folder (e.g. C:\Java\jdk1.6.0_41) and install Java.

Now you have successfully installed Java.

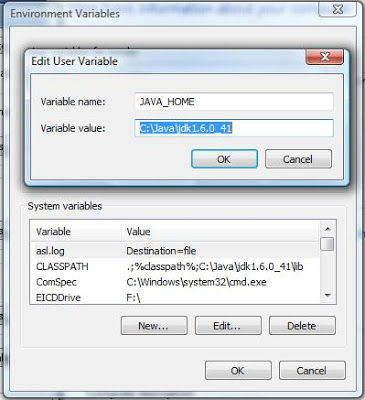

7. Setting JAVA_HOME in Windows

a) Set the JAVA_HOME environment variable in Windows, following the same steps we used for the Cygwin path above.

b) You may need to create a new variable under User Variables or System Variables: click New, enter JAVA_HOME as the variable name and your Java installation folder (e.g. C:\Java\jdk1.6.0_41) as the value.

8. Setting JAVA_HOME in Cygwin

a. To set JAVA_HOME in Cygwin, you have to set the Java home directory in a bash startup file: either the system-wide /etc/bash.bashrc or your own $HOME/.bashrc.

b. Edit your $HOME/.bashrc file to set the Java home directory:

$ vi ~/.bashrc

c. Set the Java home. If the file contains an export JAVA_HOME line commented out with #, remove the # (uncomment it); if there is no such line, add it yourself. Then enter your Java installation path:

d. export JAVA_HOME=c:\\java\\jdk1.6.0_41

(Bash treats a single backslash as an escape character, so every backslash in the Windows path must be doubled as “\\”. I installed Java under c:\java\jdk1.6.0_41 on Windows, hence the value above. Do not leave a space after the = sign.)

Please Note:

Since you are on Windows, you can also edit files from Windows Explorer with Notepad or WordPad. Windows editors, however, save files with Windows (CR+LF) line endings, which bash does not handle. Whenever you edit a file inside Cygwin from Windows, go back to the Cygwin terminal afterwards, locate the file and run the UNIX command “$ dos2unix <filename>”. This matters at every stage of the setup.

e. Exit all terminals and open a new one, so that the updated .bashrc is read.

f. To check that the variable is set, type this command in the terminal:

$ echo $JAVA_HOME

(The command should display the Java directory path you set. You can also type $ java -version, or simply $ java, to check that Java commands run in the terminal.)

Now JAVA_HOME is set in Cygwin.
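The checks in step 8 can be scripted as well. The sketch below assumes bash (Cygwin's default shell) and defines three hypothetical helpers that are not part of Cygwin: `strip_cr` removes Windows carriage returns in place, as a fallback when the `dos2unix` package is not installed (it deletes every CR, which is fine for config and script files); `to_cygdrive` turns a Windows path into a `/cygdrive/<drive>/...` path, assuming Cygwin's default `/cygdrive` prefix, so JAVA_HOME can be set without doubled backslashes; and `check_java_home` confirms that JAVA_HOME points to a folder containing `bin/java`. Inside Cygwin, the built-in `cygpath -u` command does the path conversion more robustly.

```shell
# Hypothetical helpers for step 8 (names are illustrative only).

# Remove Windows CR characters from each file in place, for when the
# dos2unix package is not installed. Deletes every CR in the file.
strip_cr() {
  local f tmp
  for f in "$@"; do
    tmp=$(mktemp) || return 1
    if ! tr -d '\r' < "$f" > "$tmp"; then
      rm -f "$tmp"
      return 1
    fi
    cat "$tmp" > "$f"   # overwrite in place, keeping the file's permissions
    rm -f "$tmp"
  done
}

# Convert a drive-letter path such as C:\java\jdk1.6.0_41 into
# /cygdrive/c/java/jdk1.6.0_41 (assumes the default /cygdrive prefix).
to_cygdrive() {
  local win="$1" drive rest
  drive="${win%%:*}"              # text before the colon, e.g. "C"
  rest="${win#*:}"                # text after the colon
  rest="${rest//\\//}"            # backslashes -> forward slashes
  drive=$(printf '%s' "$drive" | tr '[:upper:]' '[:lower:]')
  printf '/cygdrive/%s%s\n' "$drive" "$rest"
}

# Check that JAVA_HOME is set and contains an executable bin/java.
check_java_home() {
  if [ -z "${JAVA_HOME:-}" ]; then
    echo "JAVA_HOME is not set; check ~/.bashrc and open a new terminal" >&2
    return 1
  fi
  if [ -x "$JAVA_HOME/bin/java" ] || [ -x "$JAVA_HOME/bin/java.exe" ]; then
    echo "JAVA_HOME OK: $JAVA_HOME"
  else
    echo "No java executable found under $JAVA_HOME/bin" >&2
    return 1
  fi
}
```

For example, after defining `to_cygdrive` in ~/.bashrc you could write `export JAVA_HOME="$(to_cygdrive 'C:\java\jdk1.6.0_41')"` (or call `cygpath -u` the same way), then run `check_java_home` in a new terminal. A Java folder without spaces in its path (unlike C:\Program Files) may also spare you quoting problems in the Hadoop scripts.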

9. Hadoop Installation

The step-by-step instructions below will help you set up a single-node Hadoop cluster. Before we move on, it is worth reading the HDFS (Hadoop Distributed File System) Architecture Guide.

b) Download “hadoop-<version>.tar.gz” to your desired directory

c) In the terminal, go to the directory where you downloaded the hadoop-<version>.tar.gz file and run this command:

$ tar -xvf hadoop-<version>.tar.gz

d) This command extracts the Hadoop files and folders into a hadoop-<version> directory (referred to below as <Hadoop Home>).

e) Once all the files are extracted, you have to edit a few configuration files inside the <Hadoop Home> directory.

Feel free to edit these files from Windows with WordPad, but don't forget to run the UNIX command “$ dos2unix <filename>” on every file you open in Windows.

f) Now edit <Hadoop Home>/conf/hadoop-env.sh to set the Java home, as you did before for the environment variable setup.

(Since I had already set JAVA_HOME in .bashrc, I set export JAVA_HOME=$JAVA_HOME.)

g) Then update <Hadoop Home>/conf/core-site.xml with the XML below to set the Hadoop file system property (fs.default.name is the URI of the NameNode):

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://localhost:50000</value>

</property>

</configuration>

h) Now update <Hadoop Home>/conf/mapred-site.xml with the XML below (mapred.job.tracker is the host and port of the JobTracker):

<configuration>

<property>

<name>mapred.job.tracker</name>

<value>localhost:50001</value>

</property>

</configuration>

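i) Now update <Hadoop Home>/conf/hdfs-site.xml with the XML below (dfs.data.dir and dfs.name.dir are the local directories where the DataNode and the NameNode store their data):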

<configuration>

<property>

<name>dfs.data.dir</name>

<value>/home/<user>/hadoop-dir/datadir</value>

</property>

<property>

<name>dfs.name.dir</name>

<value>/home/<user>/hadoop-dir/namedir</value>

</property>

</configuration>

This assumes you create these directories in your user profile, so put your own user name after /home. If in doubt, go into the folder you created in the terminal and run the pwd command to print its full path.

For the data and name directories, I created a directory hadoop-dir in my home directory and, inside it, two directories: namedir for the NameNode and datadir for the DataNode.

Make sure both directories exist and are accessible to Hadoop by changing their permissions from inside hadoop-dir:

$ chmod 755 datadir

as well as

$ chmod 755 namedir

That is, if you run $ ls -l in hadoop-dir, both directories should be listed in the mode “drwxr-xr-x”, which means the owner has read, write and execute permissions, while group and others have only read and execute permissions.
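Steps g) to i) can also be done from the Cygwin terminal instead of WordPad, which sidesteps the dos2unix step because bash writes Unix line endings. This is a minimal sketch under stated assumptions: the function names `write_site_configs` and `make_hdfs_dirs` are hypothetical, the first one overwrites any existing core-site.xml, mapred-site.xml and hdfs-site.xml in the target folder, and both use the same ports (50000, 50001) and directory layout as above.

```shell
# Hypothetical helper: write the three Hadoop 1.x site files used in
# steps g) to i). Arguments: conf dir, DataNode dir, NameNode dir.
# WARNING: existing files with the same names are overwritten.
write_site_configs() {
  local conf="$1" data_dir="$2" name_dir="$3"
  mkdir -p "$conf" || return 1

  # g) file system URI of the NameNode
  cat > "$conf/core-site.xml" <<EOF
<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://localhost:50000</value>
  </property>
</configuration>
EOF

  # h) JobTracker host and port
  cat > "$conf/mapred-site.xml" <<EOF
<configuration>
  <property>
    <name>mapred.job.tracker</name>
    <value>localhost:50001</value>
  </property>
</configuration>
EOF

  # i) local storage directories of the DataNode and the NameNode
  cat > "$conf/hdfs-site.xml" <<EOF
<configuration>
  <property>
    <name>dfs.data.dir</name>
    <value>$data_dir</value>
  </property>
  <property>
    <name>dfs.name.dir</name>
    <value>$name_dir</value>
  </property>
</configuration>
EOF
}

# Hypothetical helper: create the DataNode and NameNode directories with
# mode 755 (drwxr-xr-x), as described above.
make_hdfs_dirs() {
  mkdir -p "$1" "$2" || return 1
  chmod 755 "$1" "$2"
}

# Usage, assuming HADOOP_HOME (a hypothetical variable here) points to
# <Hadoop Home>:
#   write_site_configs "$HADOOP_HOME/conf" "$HOME/hadoop-dir/datadir" "$HOME/hadoop-dir/namedir"
#   make_hdfs_dirs "$HOME/hadoop-dir/datadir" "$HOME/hadoop-dir/namedir"
```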

10. HDFS Format

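Before starting your cluster for the first time, format HDFS by running the command below from <Hadoop-Home-Dir>/bin. Formatting creates a new, empty file system with a new namespaceID, so do not reformat a NameNode whose data you want to keep.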

$ ./hadoop namenode -format

11. Copy File to HDFS

To copy a local file to HDFS, run this command in the terminal from <Hadoop-Home-Dir>/bin (the cluster must be running; see step 12 for starting it):

$ ./hadoop dfs -copyFromLocal <localsrc> URI

For example, if I have a sample.txt file in /home/<user>/Example,

then I execute this command from <Hadoop-Home-Dir>/bin:

$ ./hadoop dfs -copyFromLocal /home/<user>/Example/sample.txt /

This command copies the local file into the root directory (/) of HDFS.

12. Browse HDFS through web interface

Start the Hadoop cluster by executing this command from <Hadoop-Home-Dir>/bin:

$ ./start-all.sh

This starts a NameNode, a SecondaryNameNode, a DataNode, a JobTracker and a TaskTracker on your machine.

To stop all the daemons running on your machine, run:

$ ./stop-all.sh

Browse the web interface for the NameNode and the JobTracker; by default (Hadoop 1.x) they are available at:

NameNode: http://localhost:50070/

JobTracker: http://localhost:50030/

If you have problems running your cluster, especially with the DataNode daemon not starting up (this typically happens after the NameNode has been reformatted):

- Stop the cluster ($ ./stop-all.sh).

- Update the namespaceID value in your DataNode's VERSION file (e.g. <datanode dir>/current/VERSION) so that it matches the namespaceID in the current NameNode's VERSION file (<namenode dir>/current/VERSION).
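The namespaceID fix can be scripted too. This is a minimal sketch, assuming the cluster is stopped and that both VERSION files use the key=value format Hadoop writes (a line such as namespaceID=123456789). The function name `sync_namespace_id` is hypothetical; its arguments are the dfs.name.dir and dfs.data.dir folders from hdfs-site.xml. It keeps a backup of the DataNode's VERSION file before changing it.

```shell
# Hypothetical helper: copy the NameNode's namespaceID into the DataNode's
# VERSION file. Arguments: NameNode dir, DataNode dir (stop the cluster first).
sync_namespace_id() {
  local name_version="$1/current/VERSION"
  local data_version="$2/current/VERSION"
  local id

  # VERSION is a key=value properties file; extract the namespaceID value.
  id=$(sed -n 's/^namespaceID=//p' "$name_version") || return 1
  if [ -z "$id" ]; then
    echo "no namespaceID found in $name_version" >&2
    return 1
  fi

  # Keep a backup, then rewrite only the namespaceID line.
  cp "$data_version" "$data_version.bak" || return 1
  sed "s/^namespaceID=.*/namespaceID=$id/" "$data_version.bak" > "$data_version"
}

# Usage with the directories from hdfs-site.xml:
#   sync_namespace_id "$HOME/hadoop-dir/namedir" "$HOME/hadoop-dir/datadir"
```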


Comments on: "Running Hadoop on Cygwin in Windows (Single-Node Cluster)" (33)

Sunder, Looks very easy. Thanks for this article. I am going to try this out. Regards, Sabari

Sunder, Looks so good. Thanks for sharing. Thanks, Gandhi G

Sunder, I followed your instructions and set up Hadoop on my laptop. It worked perfectly. Thanks a lot. I really appreciate it. – Prakash

Dear Sunder, I have configured it your way and it's working.

But when I run code, the map phase completes 100% but reduce stays at 0%.

Please help me, I have been facing this issue for a long time.

Dear Rijuvan,

I think your issue is with running Hadoop jobs, not with the setup. Just try those steps again from the beginning, or from wherever you got lost. You should get it.

Rgds

Sunder

I followed your tutorial for installing a single node cluster. I got lost at 2 points. I would like your help at those steps.

1. In the .bashrc file, export JAVA_HOME was not present. You said we have to comment it, but since it was not present I thought of adding it on my own (please correct me if I am wrong).

2. When I typed echo $JAVA_HOME, the Cygwin terminal was blank (I guess I messed up in the above step, which caused this problem).

3.f) Now edit /conf/hadoop-env.sh to set Java home as you did it before for environmental variable setup

(Since I already set my JAVA_HOME in .bashrc so I gave JAVA_HOME=$JAVA_HOME)

At this step, since echo $JAVA_HOME printed nothing, I thought of putting the path in once again.

4. Assuming create “data” and “name” directory from your home directory, I created a directory hadoop-dir and inside that I have created 2 directories one for name node and other for data node

With respect to the above point, I would like to ask where I should look for the data and name folders.

Please help me. I am stuck and cannot move forward.

I searched a lot but could not find a reliable solution.

Hi Shadabshah,

I think your issue is in the JAVA_HOME environment variable setup. Try setting the Java environment variable again; I hope your problem will be solved. Repeat the steps and you should get it.

Rgds

Sunder

Hi Sunder:

My JAVA_HOME is set to C:\jdk1.6.0_12 and my OS is Windows Vista.

As described in your instructions, I installed Cygwin and SSHD and set up my RSA key. But at the end of your step 5, when I check:

ssh localhost

I got following response:

Connection closed by ::1

If I run start-all.sh and check processes, I can see several processes could not start:

start datanode

localhost: Connection closed by ::1

start secondary namenode

localhost: Connection closed by ::1

start tasktracker

localhost: Connection closed by ::1

So, by using jps, I only can see:

9228 NameNode

7496 JobTracker

6548 Jps

I tried many ways to fix the issue, and failed. Any suggestions? Should I reinstall Cygwin?

Thanks a lot for your instructions and help…

Sherman

Hi Sherman Wang,

Please check from your 2nd step for a successful installation on SSH, repeat the steps you should get it.

Rgds

Sunder

Dear Sundar,

Thanks for taking time to create the wonderful tutorial that helps. I followed your steps, but known_hosts file is not showing up and ssh localhost is refusing connection. Any idea what I may have missed.

$ ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/home/jay/.ssh/id_rsa):

Created directory ‘/home/jay/.ssh’.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/jay/.ssh/id_rsa.

Your public key has been saved in /home/jay/.ssh/id_rsa.pub.

The key fingerprint is:

be:2e:6c:d6:12:b4:2b:17:5c:2e:10:99:c0:8c:6e:aa jay@MYCOMPUTER

The key’s randomart image is:

+--[ RSA 2048]----+

| +.. o |

|. o + |

|. . |

| o . . . |

|o + +S |

|. *.. |

|. . *. |

|E . O .. |

| = +o |

+-----------------+

jay@MYCOMPUTER ~

$ cd ~/.ssh

jay@MYCOMPUTER ~/.ssh

$ cat id_rsa.pub >> authorized_keys

jaym@MYCOMPUTER ~/.ssh

$ ssh localhost

ssh: connect to host localhost port 22: Connection refused

jay@MYCOMPUTER ~/.ssh

$ ls -l

total 6

-rw-r--r-- 1 Techm None 401 Nov 9 09:06 authorized_keys

-rw------- 1 Techm None 1679 Nov 9 09:05 id_rsa

-rw-r--r-- 1 Techm None 401 Nov 9 09:05 id_rsa.pub

great rgds,

jay

Hi Jay,

From the error I could see you have issues with the port. Resolve the port access connectivity and try again.

Really nice tutorial. Sundar, all is going well, but at the start-all.sh step I am getting "command not found"; the same for stop-all.sh. Please guide me.

Hi Vishwa,

Check the location/path from where you execute this command.

Hi All,

I tried the Cygwin installation, and faced problems. I am on Windows 7, and when you try to give ntsec for the CYGWIN environment variable prompt, I get some warnings and a prompt suggests if I should I use cyg_user. When I gave cyg_user and completed the sshd service, I faced problems. Finally, I removed Cygwin from my machine and installed again, and this time gave the account with which I have logged into my Windows as the CYGWIN environment variable. sshd is working fine now. Currently am downloading the hadoop release.

Regards,

Suresh

Hi Suresh,

Yes, for Windows with Cygwin, you should be logged in as administrator.

Hi Sunderc,

Thanks for the very nice tutorial. I have been struggling for a long time (more than 2 weeks) with setting JAVA_HOME. Reading all the forums didn't help.

All the setting I have:

.bachrc:

export JAVA_HOME="C:\\Progra~1\\Java\\jdk1.7.0_51\\"

hadoop-env.sh:

export JAVA_HOME=$JAVA_HOME

JAVA_HOME in win environmental variable :

C:\Progra~1\Java\jdk1.7.0_51\

Note: I replaced “Program Files” with “Progra~1” . it didn’t work anyway

$ java -version gives me answer

java version "1.7.0_51"

Java(TM) SE Runtime Environment (build 1.7.0_51-b13)

Java HotSpot(TM) 64-Bit Server VM (build 24.51-b03, mixed mode)

$ export contains JAVA_HOME="C:\\Program Files\\Java\\jdk1.7.0_51\\"

But $ echo JAVA_HOME returns JAVA_HOME!!

When I try to call hadoop namenode -format the answer is :

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

Error: Could not find or load main class org.apache.hadoop.hdfs.server.namenode.NameNode

The same answer when I try ./hdfs namenode -format

All I found is that the setting for JAVA_HOME is WRONG! I’ve tried any possible combinations but still it’s not work!!

Please help me to find it out where I’m doing something wrong.

Thanks in advance,

Fatemeh

Hi Fatemeh,

Check with the path you configured, this will resolve your issue.

Hi

getting following error.

java.lang.NoClassDefFoundError: org/apache/hadoop/hdfs/server/namenode/NameNode

Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.hdfs.server.namenode.NameNode

at java.net.URLClassLoader$1.run(Unknown Source)

at java.security.AccessController.doPrivileged(Native Method)

at java.net.URLClassLoader.findClass(Unknown Source)

at java.lang.ClassLoader.loadClass(Unknown Source)

at sun.misc.Launcher$AppClassLoader.loadClass(Unknown Source)

at java.lang.ClassLoader.loadClass(Unknown Source)

Could not find the main class: org.apache.hadoop.hdfs.server.namenode.NameNode. Program will exit.

Exception in thread “main”

This is really frustrating. If anybody resolved, please reply.

Thanks in advance

Akshay – you will learn may, keep trying. check your Java and Hadoop configuration before you try to run your command

Hi Sunder,

Thanks for the article.

I executed all steps successfully, but was stuck with “Error: Could not find or load main class org.apache.hadoop.hdfs.server.namenode.NameNode”, when using following command:

$ ./hdfs namenode -format

I also checked for hadoop classpath to check JARs, everything was OK.

I am using Hadoop 2.4 and java 7

Please help

Thanks,

Manoj

Hi Manoj,

Check your JAVA configuration and the JAVA class path.

Hi,

“$ tar -xvf hadoop-.tar.gz” this command is not work properly

so please give me solution.

i am stuck.

Hi Ketan,

Check your folder permission, try some samples.

first i have download cygwin then installed but not there services(cygwin.dll). please help me how will retrieve.

HI Suresh,

Run your Cygwin installation again with a new cygwin.exe download

Hi team/sundersingh

i am working with hadoop 2.3 in cygwin 64 , i am using hdfs command and it is showing below mentioned error and every thing in the classpath but still it is giving the exception

please help me out sunder gi

step 10

$ ./hdfs namenode -format

log4j:WARN No appenders could be found for logger (org.apache.hadoop.util.Shell).

log4j:WARN Please initialize the log4j system properly.

log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.

Formatting using clusterid: CID-7dade3f9-a893-464b-90b9-cb990b3e786f

Re-format filesystem in Storage Directory \tmp\hadoop-us\dfs\name ? (Y or N) y

step 11

please look into the exception below

$ hadoop dfs –copyFromLocal /home/us/hadoopfiles/sample.txt

or else

$ ./hdfs dfs

log4j:WARN No appenders could be found for logger (org.apache.hadoop.util.Shell).

log4j:WARN Please initialize the log4j system properly.

log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.

Exception in thread "main" java.lang.RuntimeException: core-site.xml not found

at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:2244)

at org.apache.hadoop.conf.Configuration.loadResources(Configuration.java:2172)

at org.apache.hadoop.conf.Configuration.getProps(Configuration.java:2089)

at org.apache.hadoop.conf.Configuration.set(Configuration.java:966)

at org.apache.hadoop.conf.Configuration.set(Configuration.java:940)

at org.apache.hadoop.conf.Configuration.setBoolean(Configuration.java:1276)

at org.apache.hadoop.util.GenericOptionsParser.processGeneralOptions(GenericOptionsParser.java:320)

at org.apache.hadoop.util.GenericOptionsParser.parseGeneralOptions(GenericOptionsParser.java:478)

at org.apache.hadoop.util.GenericOptionsParser. (GenericOptionsParser.java:171)

at org.apache.hadoop.util.GenericOptionsParser. (GenericOptionsParser.java:154)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:64)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:84)

at org.apache.hadoop.fs.FsShell.main(FsShell.java:308)

—————————————————————-

echo $CLASSPATH

:/usr/local/hadoop-2.3.0/share/hadoop/common/hadoop-common-2.3.0.jar:/HADOOP_HOME/share/hadoop/hdfs/hadoop-hdfs-2.3.0.jar:/usr/local/hadoop-2.3.0/CONF:/usr/local/hadoop-2.3.0/share/hadoop/common/lib/*:/usr/local/hadoop-2.3.0/share/hadoop/common/*:/usr/local/hadoop-2.3.0/share/hadoop/hdfs:/usr/local/hadoop-2.3.0/share/hadoop/hdfs/lib/*:/usr/local/hadoop-2.3.0/share/hadoop/hdfs/*:/cygdrive/e/hadoop/hadoop-2.3/hadoop-2.3.0/share/hadoop/yarn/lib/*:/cygdrive/e/hadoop/hadoop-2.3/hadoop-2.3.0/share/hadoop/yarn/*:/usr/local/hadoop-2.3.0/share/hadoop/mapreduce/lib/*:/usr/local/hadoop-2.3.0/share/hadoop/mapreduce/*:/usr/local/hadoop-2.3.0/conf/core-site.xml

with regards

shanmukha sarma

Hi Shanmukha,

Check with your classpath and the .jar files should be placed in root-directory/Source folder

/usr/bin/ssh.exe: error while loading shared libraries: cyggssapi-3.dll: cannot open shared object file: No such file or directory

Check with your Cygwin package, path and root directory

Hi Sunder:

My OS is Windows 8.1 and i follow all the steps as per instructions but at the end of Step 5.b i got the message Cannot connect to port 22: Connection Failure. So what should i do?

Hi Anshul,

Ensure you log in as administrator and you have access to the ports

How to store all data and how to update that Java home in cygwin

Akshay – You may need to explore more in Hadoop & Java.